Introducing Browsertrix Crawler

I wanted to more publicly announce Webrecorder's new automated browser-based crawling system: Browsertrix Crawler.


The premise of the crawler is simple: to run a single command that produces a high-fidelity crawl (based on the specified params and config options).

Browsertrix Crawler is a single, self-contained Docker image that can run a full browser-based crawl using Puppeteer.

The Docker image contains pywb, a recent version of Chrome, Puppeteer and a customizable JavaScript ‘driver’.

The crawler is currently designed to run a single-site crawl using one or more Chrome browsers in parallel, capturing data via a pywb proxy.
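Running several browsers against one site can be pictured as a shared URL queue drained by several workers. The Python sketch below illustrates that pattern and is not Browsertrix Crawler's code. `crawl_site` and the `get_links` callback are hypothetical names: `get_links` stands in for a browser loading a page and returning its links. "Single-site" is approximated here as "same host as the start URL".

```python
import queue
import threading
from urllib.parse import urlsplit


def crawl_site(start_url, get_links, workers=3):
    """Visit every same-host page reachable from start_url using `workers` threads.

    get_links(url) is a hypothetical stand-in for a browser worker that loads
    a page and returns the URLs it links to.
    """
    host = urlsplit(start_url).netloc
    pending = queue.Queue()
    seen = {start_url}          # URLs already queued, shared by all workers
    seen_lock = threading.Lock()
    visited = []

    pending.put(start_url)

    def worker():
        while True:
            url = pending.get()
            if url is None:     # sentinel: this worker should stop
                pending.task_done()
                return
            try:
                links = get_links(url)
            except Exception:
                links = []      # treat a failed page load as a page with no links
            for link in links:
                if urlsplit(link).netloc != host:
                    continue    # single-site scope: ignore other hosts
                with seen_lock:
                    if link in seen:
                        continue
                    seen.add(link)
                pending.put(link)  # queued before task_done, so join() cannot end early
            visited.append(url)
            pending.task_done()

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for t in threads:
        t.start()
    pending.join()              # wait until every queued URL has been processed
    for _ in threads:
        pending.put(None)       # one stop sentinel per worker
    for t in threads:
        t.join()
    return visited
```

The `seen` set is checked and updated under a lock, so two workers that find the same link at the same moment still queue it only once.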

The default driver simply loads a page, waits for it to load, and extracts links using Puppeteer and the provided crawler interfaces. A more complex driver could perform other custom operations targeted at a specific site, fully customizing the crawling process.
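The "extract links" step of the default driver can be illustrated outside the browser. The sketch below is a swapped-in, static-HTML version written in Python with the standard `html.parser` module. It is not the JavaScript driver, and `LinkExtractor` and `extract_links` are hypothetical names. It resolves relative `href` values against the page URL, drops `#fragment` parts, and keeps only HTTP(S) links.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collect absolute URLs from <a href="..."> tags in an HTML document."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names, so <A HREF> matches too.
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if not href:
            return
        # Resolve relative links against the page URL and drop #fragments,
        # so "/about" and "/about#team" count as the same page.
        url, _fragment = urldefrag(urljoin(self.base_url, href))
        if url.startswith(("http://", "https://")) and url not in self.links:
            self.links.append(url)


def extract_links(page_url, html):
    """Return the unique HTTP(S) links found in `html`, in document order."""
    parser = LinkExtractor(page_url)
    parser.feed(html)
    parser.close()
    return parser.links
```

A static parser only sees links present in the HTML it is given. Links that scripts insert after the page loads are invisible to it, which is one reason to extract links from the live page in a browser.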

The crawler outputs WARC files and, optionally, a WACZ file.

The goal is to make it as easy as possible to run a browser-based crawl on the command line, for example (using Docker Compose for simplicity):

```sh
docker-compose run crawler crawl --url https://netpreserve.org/ --collection my-crawl --workers 3 --generateWACZ
```

After running the crawler:

- a WACZ file will be available for use with ReplayWeb.page at ./crawls/collections/my-crawl.wacz
- the WARC files will be available (in standard pywb directory layout) in ./crawls/collections/my-crawl/archive/
- the CDX index files will be available (in standard pywb directory layout) in ./crawls/collections/my-crawl/indexes/
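A WACZ file is a ZIP package that bundles a crawl's WARCs, indexes and related metadata into a single file. Its contents can therefore be inspected with Python's standard `zipfile` module. The sketch below uses a hypothetical helper, `summarize_wacz`, that groups entries by top-level folder. It makes no assumption about which folders are present, because the exact layout depends on the WACZ version.

```python
import zipfile
from collections import defaultdict


def summarize_wacz(path):
    """Group the entries of a WACZ (ZIP) file by top-level folder.

    Files stored at the root of the package are grouped under ".".
    """
    groups = defaultdict(list)
    with zipfile.ZipFile(path) as zf:
        for name in zf.namelist():
            top, sep, _rest = name.partition("/")
            groups[top if sep else "."].append(name)
    return dict(groups)
```

For example, after the crawl above, `summarize_wacz("./crawls/collections/my-crawl.wacz")` would list the WARC and index entries packaged from the collection.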

Browsertrix Crawler currently supports a number of command-line options, and support for a more extensive crawl config is coming.

Browsertrix Crawler represents a refactoring of the all-in-one Browsertrix system into a modular, easy-to-use crawler.

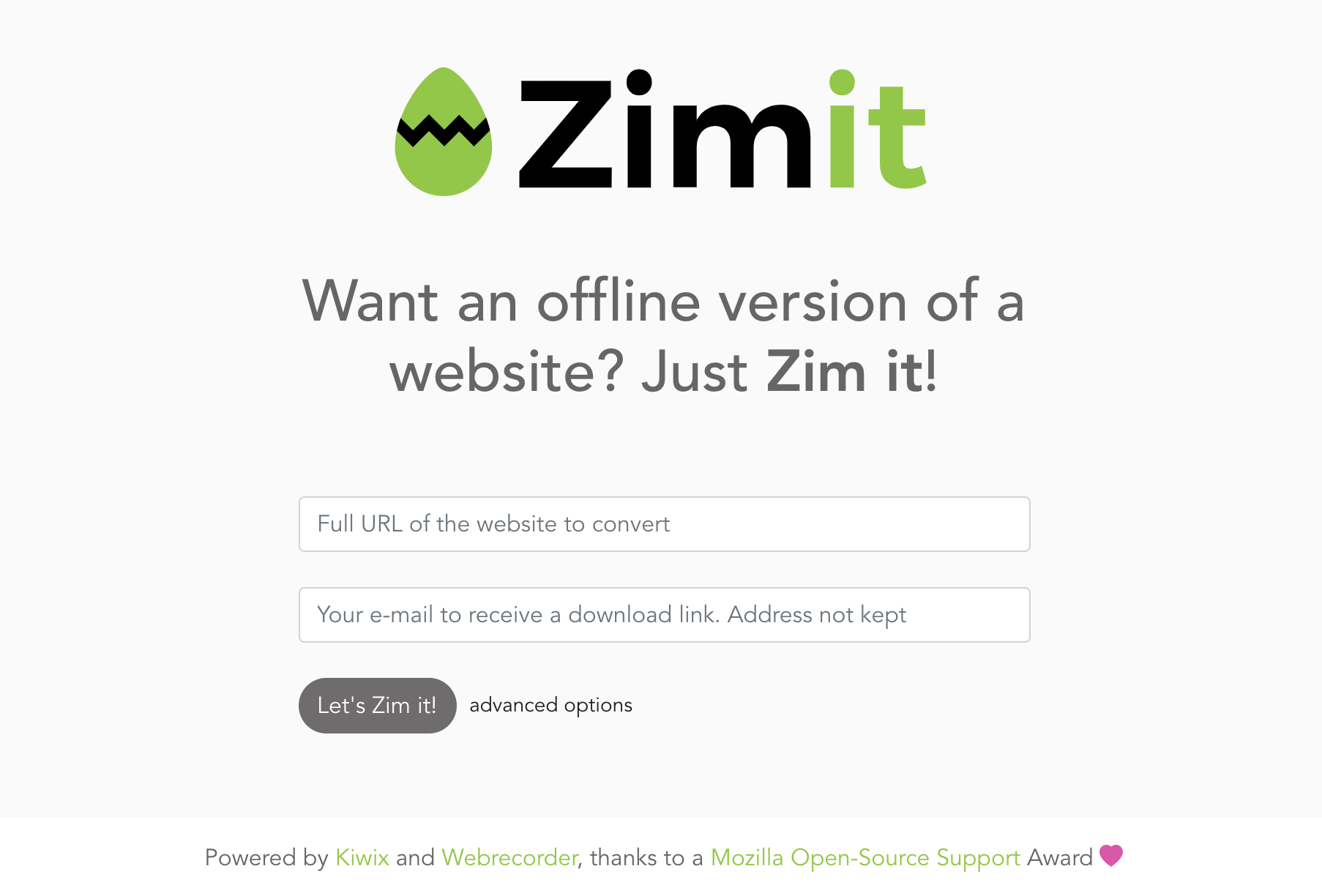

Use Case: Zimit Project

Browsertrix Crawler was initially developed in collaboration with Kiwix to support their brand-new crawling system, Zimit.

The system is publicly available at: https://youzim.it.

Kiwix is a non-profit that produces customized archives, primarily for use in offline environments, as well as open-source tools for viewing these archives on a variety of mobile and desktop platforms.

Kiwix’s core focus includes producing archived copies of Wikipedia. Kiwix maintains an existing Docker-based crawling system called “ZIM Farm” that runs each crawl in a single Docker container. To support this existing infrastructure, Browsertrix Crawler was architected to run a full crawl in a single Docker container. This versatile design makes Browsertrix Crawler easy to use as a standalone tool and adaptable to other environments.

The Zimit system produces web archives in the ZIM format, the core format developed by Kiwix for their offline viewers and the basis of their downloadable archives. ZIM files created by Zimit include a custom version of the wabac.js service worker, which also powers ReplayWeb.page. Kiwix is further developing support for loading Zimit-created ZIM files in all of their offline players.

To stay up-to-date with the Zimit project, you can follow it on GitHub at: https://github.com/openzim/zimit.

The development of the Zimit system was supported in part by a grant from the Mozilla Foundation. Webrecorder wishes to thank Kiwix, and indirectly, Mozilla, for their support in creating Browsertrix Crawler.