Archive, Curate, and Share the Web.

High-fidelity crawling, innovative quality assurance, and collaborative organization empower you to preserve, curate, and share archived web content with confidence.

Built and run by Webrecorder since 2021.

7-day free trial of the Starter plan.

Powerful, Observable, Automated Archiving

Archive dynamic websites

Create an interactive copy of any website! Capture a website exactly as you see it now, or schedule crawls at regular intervals to capture a site as it evolves.

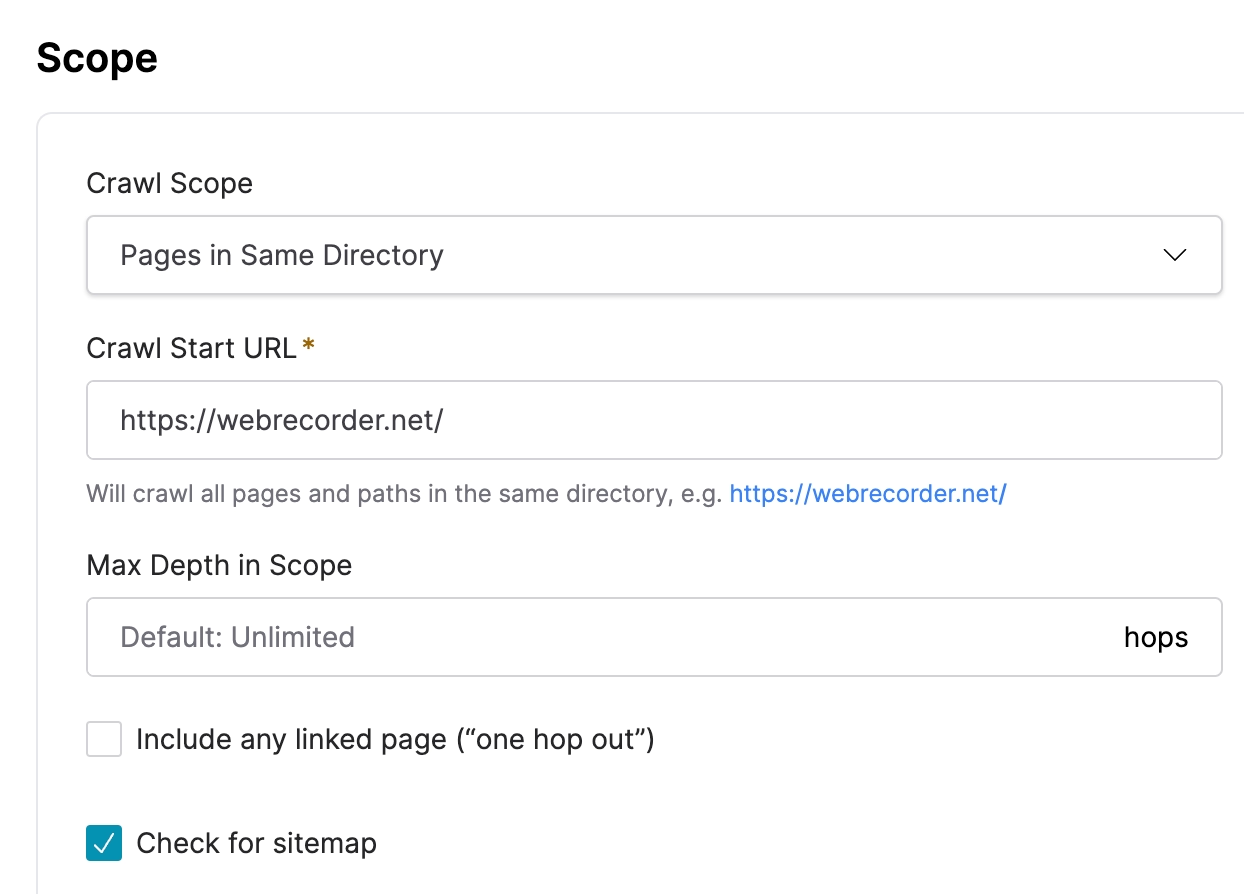

Intuitive and granular configuration options enable you to set up and run your crawls with ease.

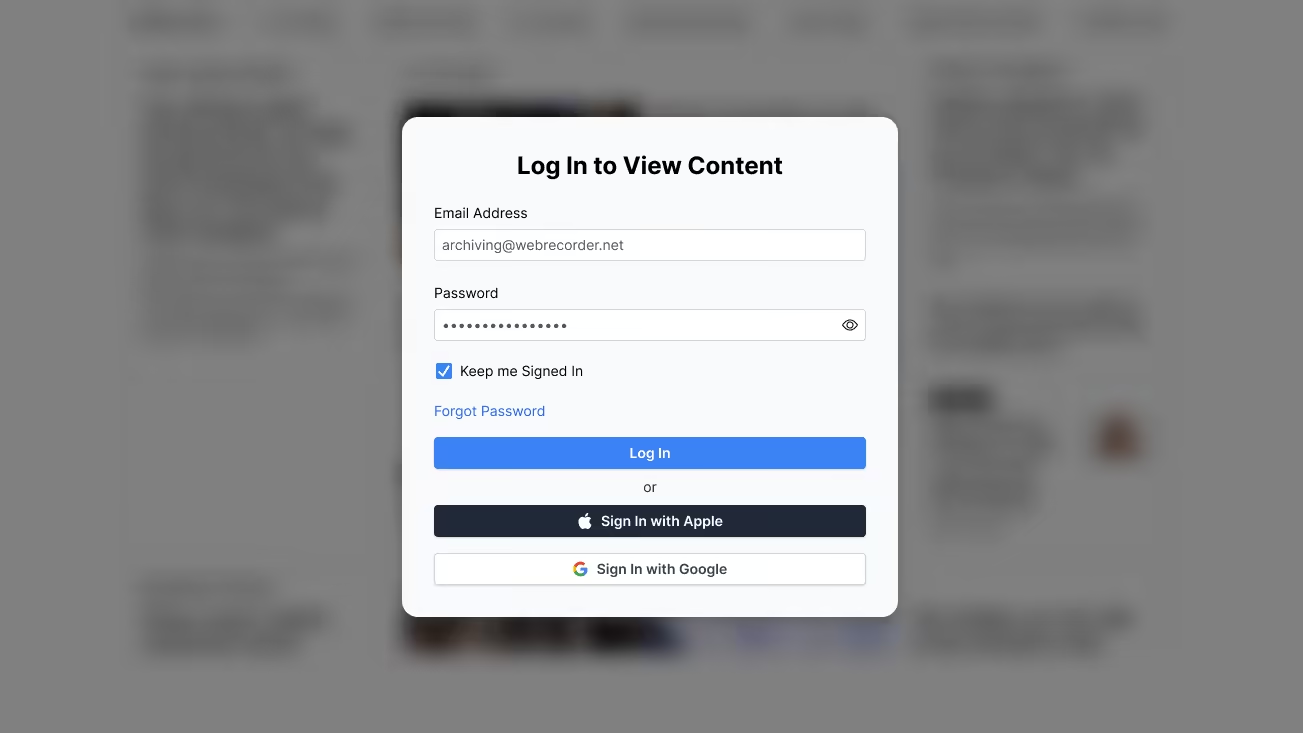

Preserve social media & paywalled content

Get behind paywalls and capture content behind logins.

Browsertrix’s innovative crawling system uses real browsers. Login sessions, cookies, and browser preferences (like ad-blocking and language preferences) allow you to crawl the web like a real user.

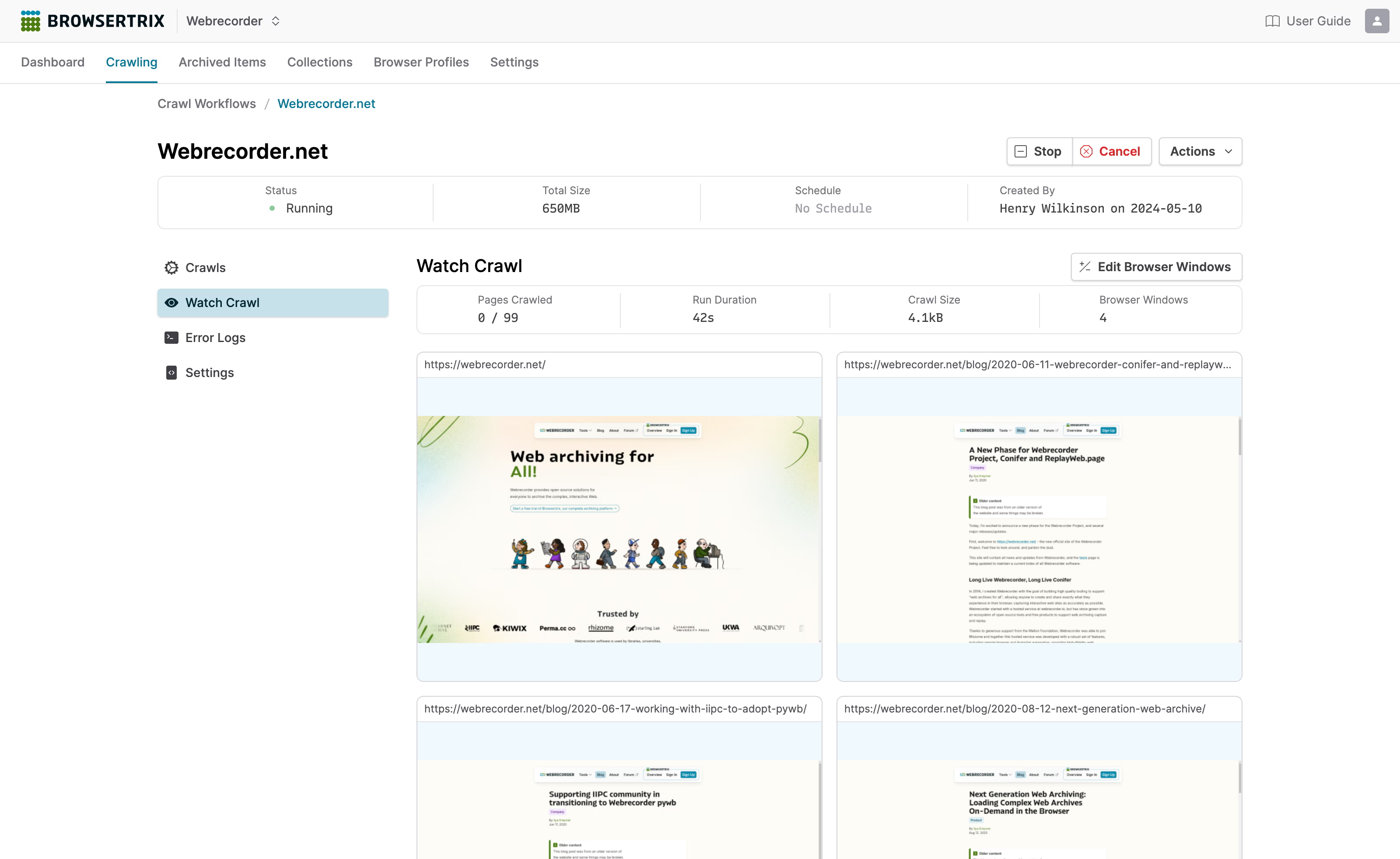

Watch crawls and exclude content in real time

Monitor running crawls in real-time. Diagnose issues and ensure you are capturing exactly the content you want.

Exclude URLs without restarting a crawl to keep from getting bogged down in crawler traps, such as websites that dynamically generate new URLs.

Signed, sealed, authenticated

Always on schedule

World traveler

Available on Pro plans

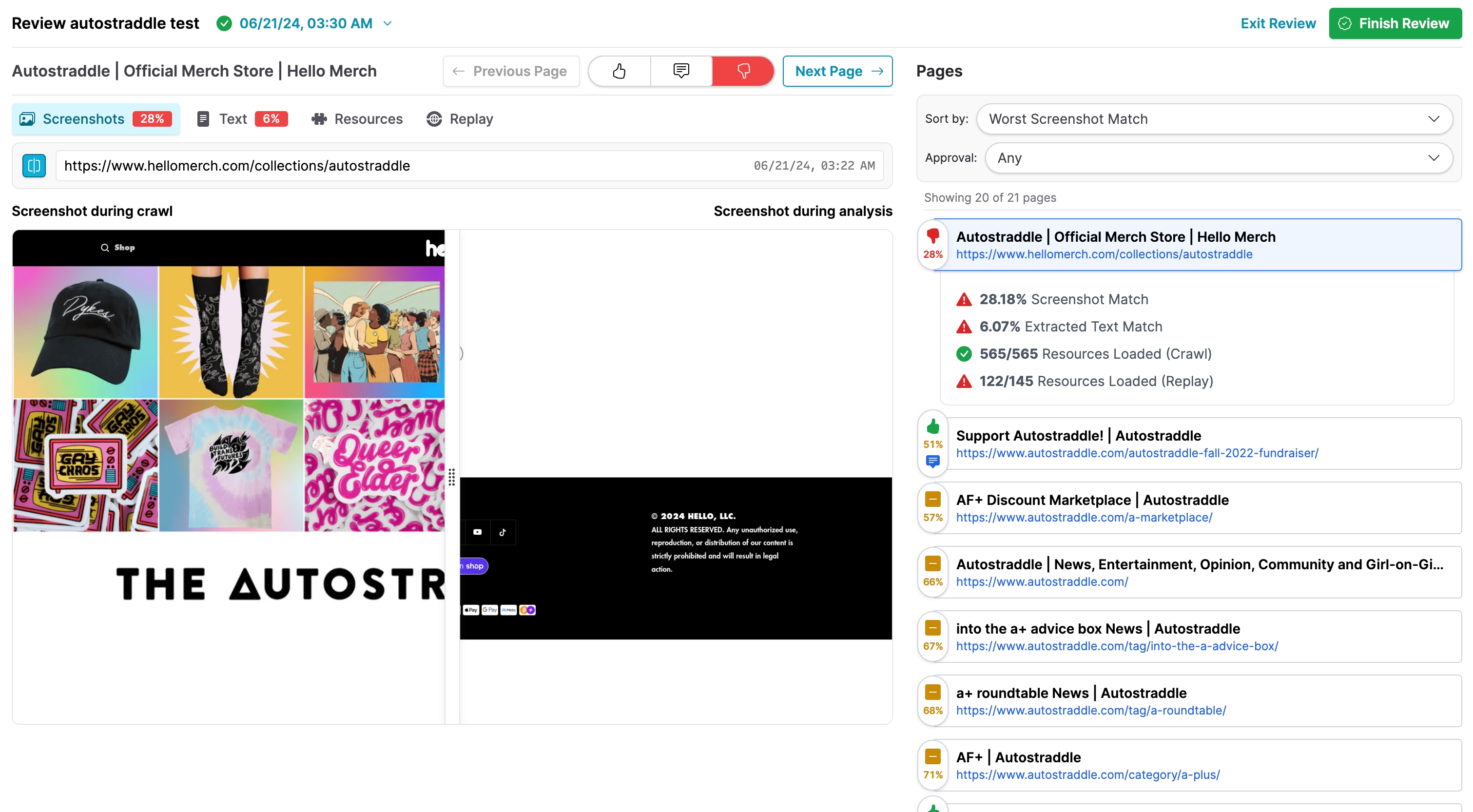

Industry-Leading Quality Assurance Tools

Automatically analyze capture success

Get a better picture of the quality of your crawl. Run crawl analysis to compare screenshots, extracted text, and other page information from your crawl output with data gathered while crawling the live site.

Analyze crawl qualityCollaboratively review key pages

Assess results and give your team a better idea of a crawl’s overall success with Browsertrix’s dedicated review interface.

Problematic pages are highlighted for ease of navigation. Mark key pages as successful or unsuccessful and leave comments for other curators.

Review crawl quality

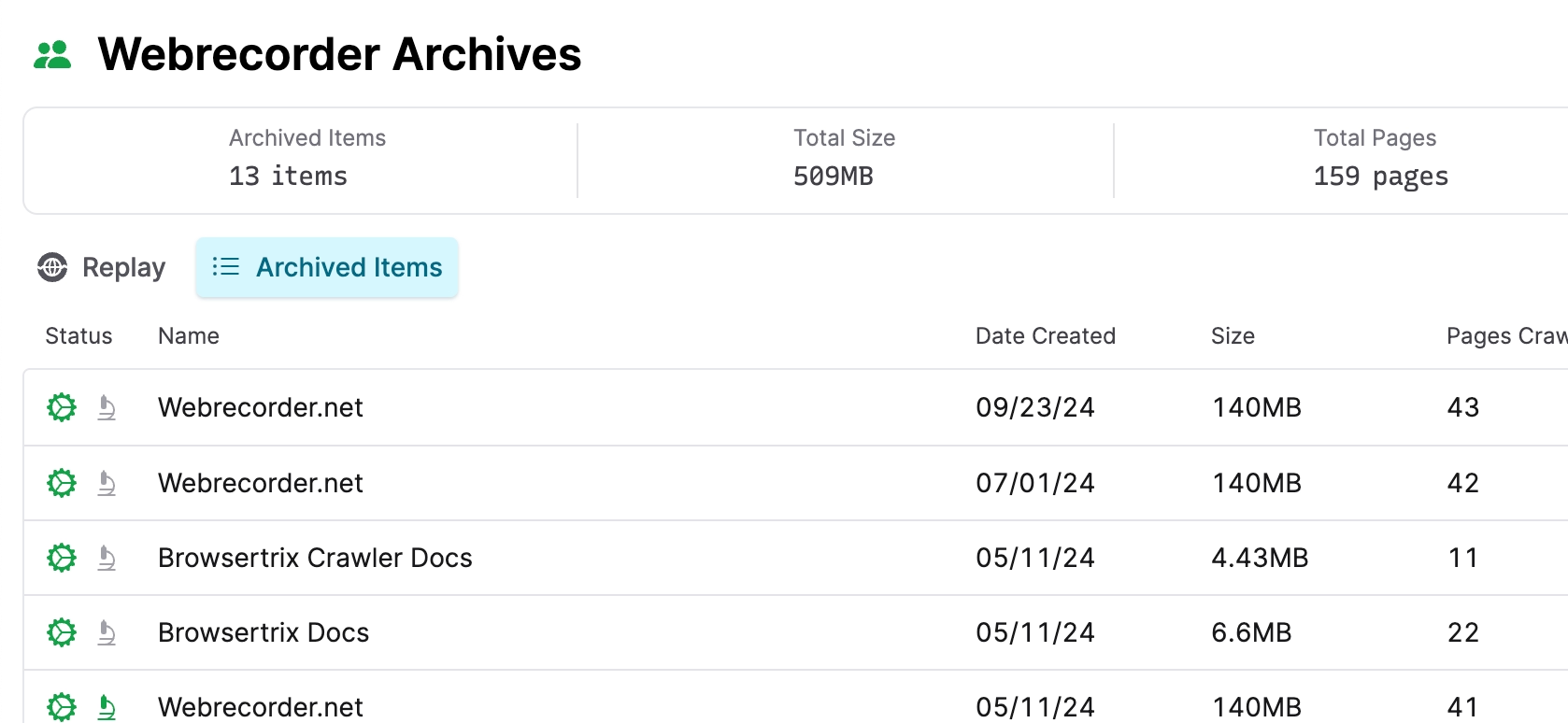

Instantly download crawls

Share curatorial notes

Integrate manual captures

Curated, Shareable Web Archives

Organize and contextualize your archived content

Merge outputs from different crawls into a single collection. Add and track collection metadata together with your team.

Extend your collection by importing content from supported web archive formats, such as WACZ files generated by ArchiveWeb.page.

Showcase your collections

Collections are private by default, but can be added to your organization’s public gallery to share with the world

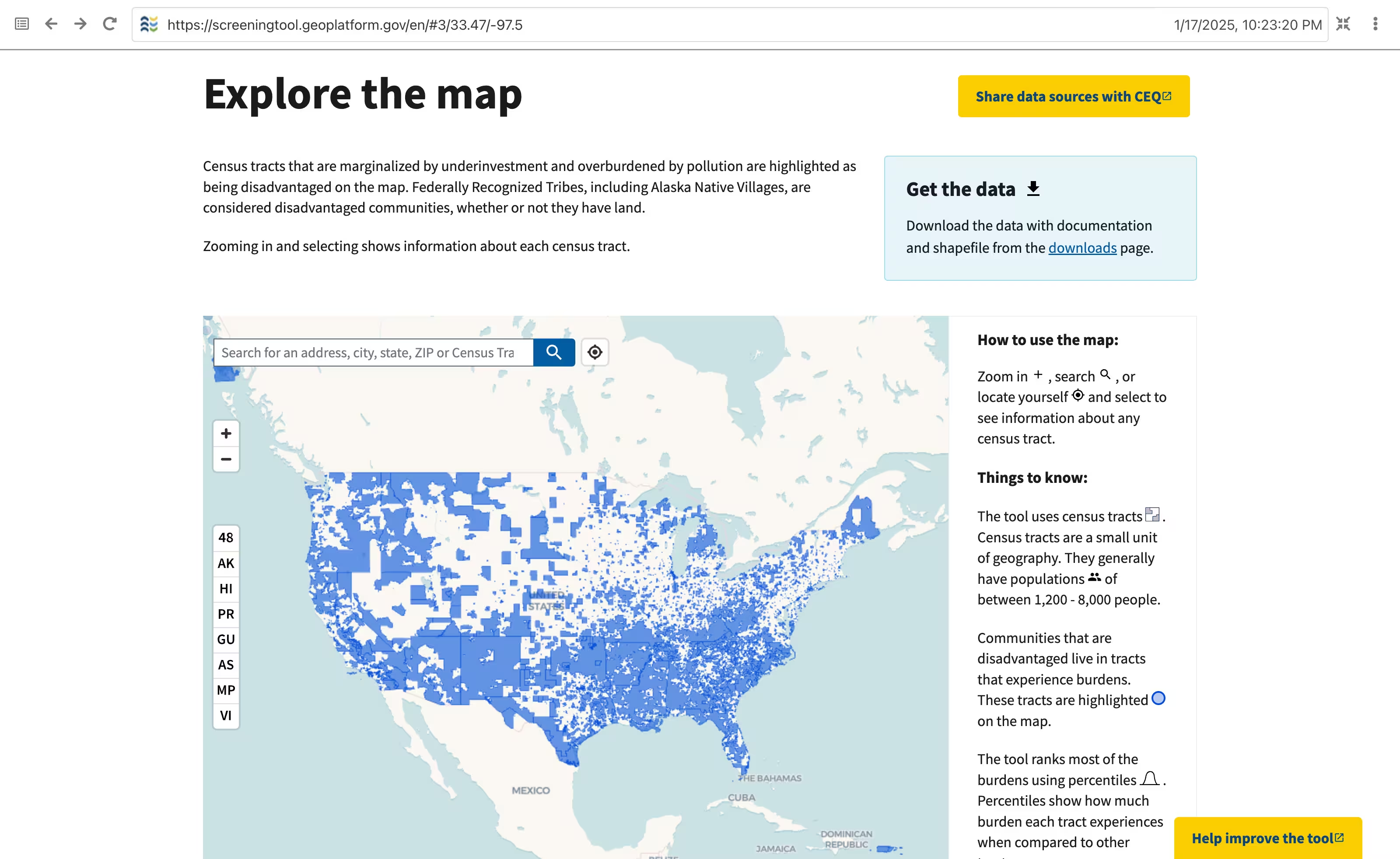

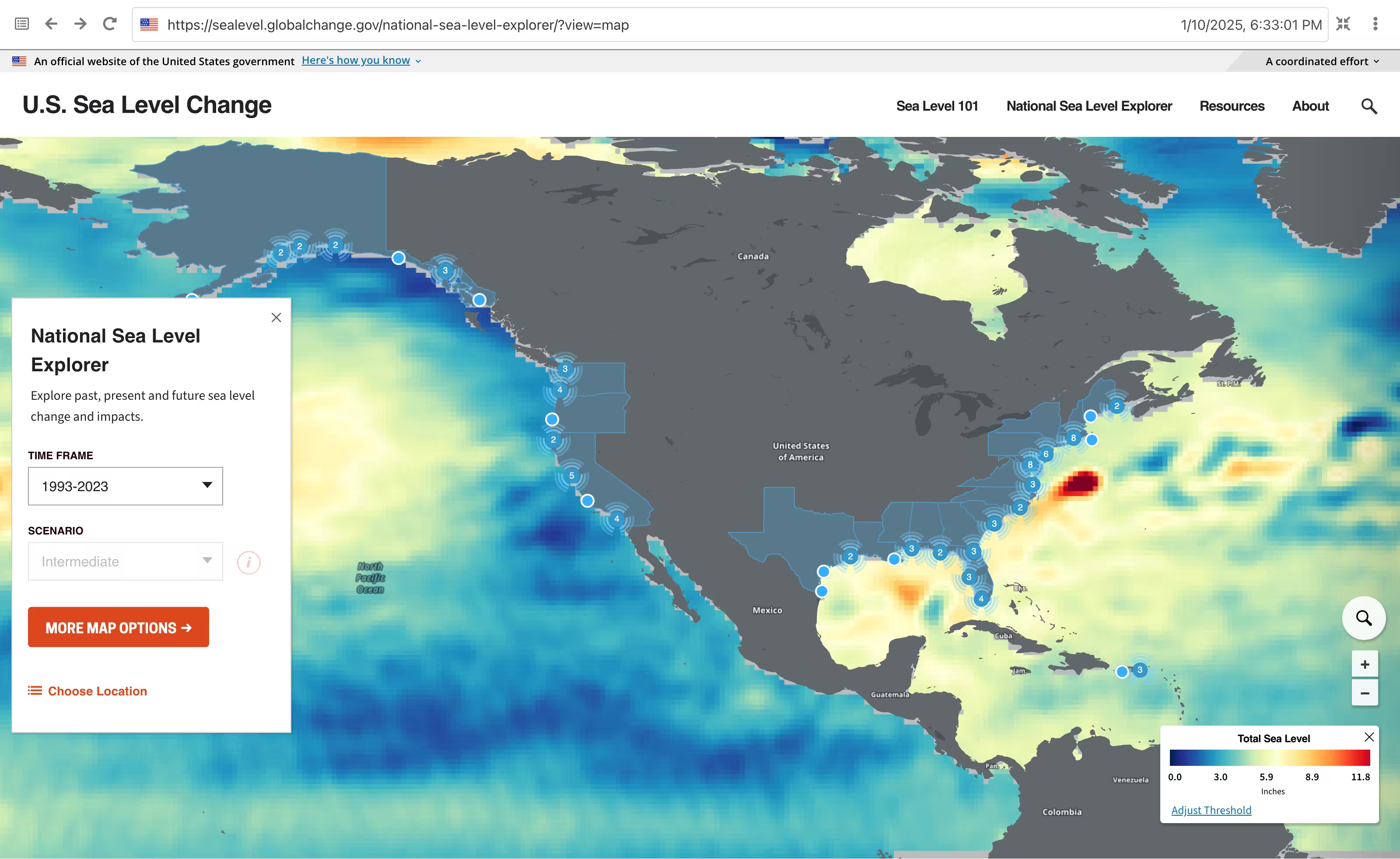

Climate & Economic Justice Screening Tool

Census tracts that are marginalized by underinvestment and overburdened by pollution

USAID

United States Agency for International Development Web Archive

EPA

Archive of the US Environmental Protection Agency website

CDC

Archive of the US Center for Disease Control's website

Upload existing archives

Best-in-class playback

Collaborative workspace

Get Started Today!

Sign up for a hosted plan and start archiving with zero setup.

Individual Free Trial

Our full suite of features, designed to scale with you. A perfect introduction to high fidelity, self-directed web archiving.

Free for 7 days, then $30/mo.

What’s included

- 180 minutes of execution time, with additional minutes available for purchase

- 100GB of disk space

- Up to 2,000 pages per crawl

View more Individual plans

Switch between Starter, Standard, and Plus plans at any time from your dashboard.

Standard

$60/mo

360 minutes of execution time,

220GB of disk space,

2 concurrent crawls,

and up to

5,000 pages per crawl.

Plus

$120/mo

720 minutes of execution time,

500GB of disk space,

3 concurrent crawls,

and up to

10,000 pages per crawl.

Pro

Flexible crawling quotas, storage space, concurrency, and dedicated support. Ideal for web archiving teams and small organizations.

Billed annually.

What’s included

- More execution time

- Additional disk space

- Custom pages per crawl

- Custom concurrent crawls

- Regional proxies

- Bundle options

- Extended storage options

- Optional dedicated support

Enterprise

Full service experience, tailored to your business needs. Let our team handle every stage of web archiving with precision and care.

Billed annually.

What’s included

- Dedicated account manager

- Expert web archivists

- Custom crawl & QA reports

- On-premise deployment options

- Flexible scalability

- Dedicated proxies

- Advanced support

- Customized solutions for capturing and preserving your online presence and marketing assets

Have custom requirements? Let’s chat

Resources

Introduction and reference for using Browsertrix to automate web archiving. User Guide

Instructions on how to install, set up, and deploy self-hosted Browsertrix. Self-Hosting Docs

Support

Professional support for on-premise deployment, maintenance, and updates. Get a Quote

We regularly read and respond to questions on the Webrecorder community forum. Help Forum

View open issues, submit a change request, or report something not working. GitHub Issues

FAQ

Can I capture dynamic websites?

Yes! Browsertrix loads each page it captures in a real web browser. This means you can capture content that other tools might miss, like parts of the page that are dynamically loaded using JavaScript. Generally, if it loads in a browser, Browsertrix is able to capture it!

If you’re having an issue archiving dynamic interactions on a site, we might be able to help in the Community Forum

Can I crawl webpages behind logins?

Yes! With Browsertrix, you can use browser profiles to log into websites, without exposing your password to the crawler. With an active browser profile, Browsertrix can securely crawl pages using your logged-in account.

We always recommend using dedicated accounts for crawling.

Can I crawl social media?

Generally, yes! Social media sites (Instagram, Facebook, X.com, etc.) are quite complex and therefore difficult to archive. You can use browser profiles to get behind logins, though successful crawling and accurate replay of social media sites is always a moving target.

We always recommend using dedicated accounts for crawling.

Time based billing? Can I run test crawls without being charged?

Unlike some other crawling platforms, Browsertrix bills users based on the elapsed time spent crawling a website, scaled by the amount of browser windows used. We don't offer free test crawls as running crawl workflows has a cost for us even if data isn't saved! To minimize execution time used, we recommend monitoring the crawl queue — especially in the beginning — and adding exclusions or other limits to ensure that a crawl doesn't use more execution time than anticipated.

What happens when I run out of crawling minutes?

When you run out of crawling minutes your crawling is paused, which means your running crawls are stopped and you can’t start new crawls. Don’t worry, nothing is deleted! You can upgrade your plan at any time in your Org Settings to increase your crawl minutes and continue running new crawls.

Can I upload WARC files?

Currently we only support uploading WACZ files, Webrecorder’s open standard format for portable self-contained web archives. WACZ files contain WARC data and conversion is lossless. To package your existing WARCs into WACZs, we recommend using our py-wacz command-line application.

Can I schedule automated crawls?

Yes, you can select the frequency of automated crawls on a daily, weekly, or monthly basis. Scheduled crawls will automatically launch even if you are not actively logged into Browsertrix.

Can I export my archived items?

Yes! Exporting content from Browsertrix is as easy as clicking the download button in the item’s actions menu. All content is downloaded as WACZ files, which can be unzipped to reveal their component WARC files with your favorite ZIP extractor tool. Your content will always be yours to export in standard formats.

Is my payment information safe? Can I try Browsertrix without providing payment info?

Yes, we use Stripe, a well-known and trusted payment processor, to handle our payments, so we never see your payment information in the first place. If you’re interested in trying Browsertrix without providing a payment method, or if you’d like to try Browsertrix for longer than 7 days, reach out and we’ll get back to you!

Should I self-host or use Webrecorder's cloud-hosted service?

Perhaps unsurprisingly, we would recommend that most users sign up for our instance. Browsertrix is a complex cloud-native application intended to scale depending on end-user needs. Outside of specific support contracts, we may not be able to support custom instance configurations and generally we focus on changes that target our infrastructure.

I’m allergic to web apps, can I run Browsertrix in my terminal?

You might want to check out Browsertrix Crawler, the command-line application responsible for all crawling operations in Browsertrix. For even bigger jobs that can’t run on your local machine, you can integrate the Browsertrix API into your own scripts.