Next Generation Web Archiving: Loading Complex Web Archives On-Demand in the Browser

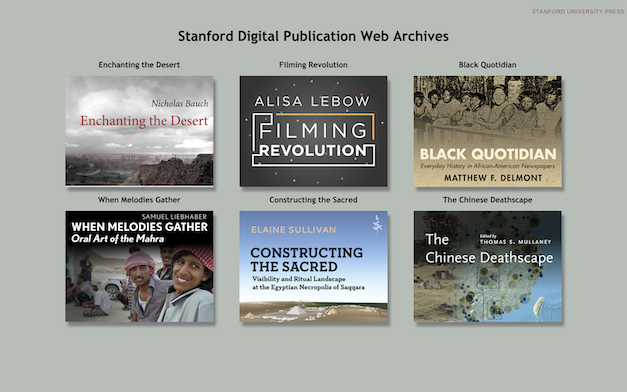

I’m excited to present a new milestone for Webrecorder: the release of six high-fidelity web archives of complex digital publications, accessible directly in any modern browser. These projects represent the entire catalog of Stanford University Press’s Mellon-funded digital publications and are the culmination of a multi-year collaboration between Webrecorder and Stanford University Press (SUP).

You can read more about this collaboration, and find additional details on each of the publications, in the corresponding blog post from SUP.

It has been really exciting to collaborate with SUP on these boundary-defining digital publications, which allow Webrecorder to also push the boundaries of what is possible with web archiving.

The web archives cover a variety of digital publication platforms and complexities: Scalar sites, embedded videos, non-linear navigation, 3D models, interactive maps. Four of the projects are also fully searchable with an accompanying full-text search index.

All six projects are preserved at or near full fidelity (or will be soon: two are in final stages of completion). But digital preservation and web archiving require long-term maintenance, and my goal, in addition to creating web archives, is to lower the barrier to web archive maintenance.

For example, maintaining the live versions of these publications requires hosting Scalar, or Ruby on Rails, or other infrastructure. Maintaining these web archives simply means hosting six large files, ranging from ~200MB to 17GB, and a static web site online.

To present fully accessible, searchable versions of these projects, only static web hosting is necessary. There is no ‘wayback machine’, no Solr, and no additional infrastructure. The web page for this project is currently hosted as a static site via GitHub, while the web archive files are currently hosted via Digital Ocean’s S3-like bucket storage.

In the near future, we plan to transfer hosting from Webrecorder to SUP, which will simply involve deploying the static site from GitHub in another location and transferring the static files from one cloud host to SUP’s digital repository, and that’s it! Once transferred, SUP will not require any additional expertise, beyond simple website hosting, to keep these web archives accessible.

My hope is that this will demonstrate a new model for more sustainable web archiving, allowing complex web archives to be stored and hosted alongside other digital objects.

Below, I’ll explain the technologies used in making this possible, and steps taken to create these archives.

ReplayWeb.page and WACZ

This is all possible due to two new technologies from Webrecorder: the ReplayWeb.page system and a new collection format currently in development, the Web Archive Collection Zip (WACZ) format, explained in more detail below.

ReplayWeb.page, initially announced in June, is a full web archive replay system implemented in JavaScript that loads web archives directly in the browser.

To better support this project, the ReplayWeb.page embedding functionality has been further improved, and now supports search features, including page title and text search.

For example, it is now possible to link directly to certain pages or search queries in an embedded archive:

ReplayWeb.page embeds can be added to any web page. Here are some further examples of ReplayWeb.page embeds presented on this site, including the Filming Revolution project:

Web Archive Collection Zip (WACZ) - More Compact, Portable Web Collections

An astute reader might wonder: loading web archives in the browser works great for small archives, but does this work for many GBs of data? Surely loading large WARCs in the browser will be slow and unreliable? And what about full text search and other metadata?

Indeed, loading very large WARC files, the standard format in web archiving, directly in the browser is not ideal, although ReplayWeb.page natively supports WARC loading as well. An individual WARC file is intended to be part of a larger collection, and lacks its own index. Given only a WARC file, the entire file must be read by the system to determine what data it contains.
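To make the "no index" point concrete, here is a minimal sketch of what finding the contents of a WARC involves. It assumes an uncompressed WARC with simplified records (real records carry more headers and are usually gzip-compressed one by one), and the helper names `scan_warc` and `make_record` are made up for this illustration. The scanner has to walk from the first record to the last, because only each record's own header says where the next one begins.

```python
import io


def make_record(uri, body):
    """Build a simplified, uncompressed WARC record (illustration only)."""
    head = (
        "WARC/1.0\r\n"
        "WARC-Type: resource\r\n"
        f"WARC-Target-URI: {uri}\r\n"
        f"Content-Length: {len(body)}\r\n"
        "\r\n"
    ).encode("utf-8")
    return head + body + b"\r\n\r\n"


def scan_warc(stream):
    """Walk the stream record by record and return (offset, uri) pairs.

    Every record header is visited, since the file has no table of contents.
    """
    index = []
    while True:
        offset = stream.tell()
        version = stream.readline()
        if not version:
            break  # end of file
        if not version.strip():
            continue  # blank separator lines between records
        headers = {}
        while True:
            line = stream.readline()
            if line in (b"\r\n", b"\n", b""):
                break  # end of this record's header block
            name, _, value = line.decode("utf-8").partition(":")
            headers[name.strip().lower()] = value.strip()
        # Skip over the payload; only its length is needed to find the next record.
        stream.seek(int(headers["content-length"]), io.SEEK_CUR)
        index.append((offset, headers.get("warc-target-uri")))
    return index


first = make_record("https://example.com/", b"<html>home</html>")
second = make_record("https://example.com/about", b"<html>about</html>")
index = scan_warc(io.BytesIO(first + second))
```

The resulting list of offsets is essentially what a CDX index stores ahead of time, so that a replay system does not have to repeat this scan.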

Further, the WARC is not designed to store metadata: titles, description, list of pages, or any other information about the data to make it useful and accessible for users (though Webrecorder has managed to squeeze this data into the WARC in the past).

The Web Archive Collection Zip (WACZ) format attempts to address all of these issues.

The format provides a single file bundle, which can contain other files, including WARCs, indexes (CDX), page lists, and any other metadata. All of this data can be packaged into a single bundle, a standard ZIP file, which can then be read and created by existing ZIP tools, creating a portable format for web archive collection data.

The ZIP format has another essential property: it is possible to read parts of a file in a ZIP without reading the entire file! Unlike a WARC, a ZIP file has a built-in index of its content. Thus, it is possible to read a portion of the CDX index, then look up the portion of a WARC file specified in the index, and get only what is needed to render a single page. The WACZ spec relies on this behavior and ReplayWeb.page takes full advantage of this functionality.
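This property is easy to observe with Python's standard `zipfile` module. The sketch below is only an illustration of the ZIP behavior, not how ReplayWeb.page is implemented: it builds a ZIP with one large entry and one small one, then reads the small entry through a wrapper that counts the bytes actually requested. The entry names and the `CountingReader` class are assumptions made up for the example.

```python
import io
import zipfile


class CountingReader(io.BytesIO):
    """In-memory file that records how many bytes are actually read."""

    def __init__(self, data):
        super().__init__(data)
        self.bytes_read = 0

    def read(self, size=-1):
        chunk = super().read(size)
        self.bytes_read += len(chunk)
        return chunk


# Build a bundle with a large entry and a small one (names are illustrative).
buf = io.BytesIO()
with zipfile.ZipFile(buf, "w", zipfile.ZIP_STORED) as zf:
    zf.writestr("archive/big.warc", b"x" * 5_000_000)
    zf.writestr("pages/pages.jsonl", b'{"url": "https://example.com/"}\n')
data = buf.getvalue()

# Opening the ZIP reads its directory from the end of the file; reading one
# entry then touches only that entry's bytes.
reader = CountingReader(data)
with zipfile.ZipFile(reader) as zf:
    page_list = zf.read("pages/pages.jsonl")

print(f"read {reader.bytes_read} of {len(data)} bytes")
```

The small entry comes back after reading a tiny fraction of the file, because the reader jumps to the directory at the end of the ZIP and then straight to the entry it wants.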

For example, the Filming Revolution project is loaded from a 17GB WACZ file, which contains 400+ Vimeo videos. Using only a regular WARC, the entire 17GB+ file would need to be downloaded, which would take far too long for most users.

Using WACZ, the system loads only the initial HTML page when loading the project. Additional videos are each streamed on-demand as they are viewed. The system would only load the entire 17GB if a user watched every single video in the archive, but a more casual user can get a glimpse of the project by browsing a few videos quickly.

Even though the web archive is presented in the browser, the browser need not download the full archive all at once!

(This requires the static hosting to support HTTP range requests, a standard HTTP feature supported since the mid-90s.)
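For readers unfamiliar with range requests, here is a small sketch using Python's standard library. The URL and the helper names `range_request` and `fetch_range` are placeholders for illustration. The client asks for a specific span of bytes with a `Range` header, and a server that supports the feature answers with status 206 (Partial Content) and only those bytes.

```python
import urllib.request


def range_request(url, start, length):
    """Build a GET request for `length` bytes starting at byte `start`."""
    end = start + length - 1  # HTTP byte ranges are inclusive on both ends
    return urllib.request.Request(url, headers={"Range": f"bytes={start}-{end}"})


def fetch_range(url, start, length):
    """Fetch a byte range, failing if the server ignores the Range header."""
    with urllib.request.urlopen(range_request(url, start, length)) as resp:
        if resp.status != 206:
            raise RuntimeError("server did not return partial content")
        return resp.read()


# Building the request does not touch the network; fetch_range() would.
req = range_request("https://example.com/collection.wacz", 1024, 512)
print(req.get_header("Range"))
```

A static host that answers such requests correctly is all the server-side support this approach needs.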

Creating WACZ Bundles

WACZ files are standard ZIP files and were initially created with existing command-line tools for ZIP files.
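As a rough illustration of how little is involved, the sketch below assembles a WACZ-like bundle with Python's standard `zipfile` module. The entry names, the placeholder contents, and the `build_bundle` helper are all assumptions for this example, not the finalized layout, since the format is still in development. Storing the WARC without additional ZIP compression is a design choice made here because WARC files are typically gzip-compressed already, and it keeps byte offsets inside the WARC contiguous inside the ZIP.

```python
import io
import json
import zipfile


def build_bundle(warc_gz, cdx_lines, pages):
    """Pack a WARC, its index, and a page list into one in-memory ZIP."""
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w") as zf:
        # Store the WARC as-is, so a byte range inside the WARC is also a
        # contiguous byte range inside the ZIP file.
        zf.writestr("archive/data.warc.gz", warc_gz,
                    compress_type=zipfile.ZIP_STORED)
        # Text files are small and compress well.
        zf.writestr("indexes/index.cdx", b"".join(cdx_lines),
                    compress_type=zipfile.ZIP_DEFLATED)
        page_list = "".join(json.dumps(page) + "\n" for page in pages)
        zf.writestr("pages/pages.jsonl", page_list,
                    compress_type=zipfile.ZIP_DEFLATED)
    return buf.getvalue()


bundle = build_bundle(
    warc_gz=b"placeholder for real WARC bytes",
    cdx_lines=[b"placeholder index line\n"],
    pages=[{"url": "https://example.com/", "title": "Home"}],
)

# Any ZIP tool can list and read the result.
with zipfile.ZipFile(io.BytesIO(bundle)) as zf:
    names = zf.namelist()
    warc_info = zf.getinfo("archive/data.warc.gz")
```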

To simplify the creation of WACZ files, a new command-line tool for converting WARCs to WACZ is being developed. The tool is not yet ready for production use, but you can follow the development on the repo.

The format is still being standardized, and if you have any suggestions or thoughts for what should be in the WACZ format, please open an issue on the main wacz-format repository on GitHub or leave a comment on the discussion thread on the Webrecorder Forum.

Creating the SUP Web Archives: From Web Archives to Containers and Back

I wanted to share a bit more about how these six archives were created.

All of the archives were created with a combination of the Webrecorder Desktop App, Browsertrix, and warcit. The one exception is Enchanting the Desert, which was captured by SUP’s Jasmine Mulliken using Webrecorder.io a few years ago, then converted to WACZ and transferred to the new system for completeness.

At the beginning of our collaboration, Jasmine at SUP provided full backups of each project. My initial preservation approach was to attempt to run each project in a Docker container, and then overlay them with web archives. An early prototype of the system employed this approach, with ReplayWeb.page seamlessly routing to the WACZ or to a remote, containerized server.

Ultimately, one by one, I realized that, at least for these six projects, running the full server did not provide any additional fidelity. Most of the complexity was in the web archive anyway, and little was gained by the additional server component.

To run the server + web archive version, a Docker setup and Kubernetes cluster operation were necessary, significantly complicating the operations, while a web archive only version could be run with no extra dependencies or maintenance requirements.

The Filming Revolution project is a PHP-based single-page application that contains 400+ Vimeo videos. The PHP backend was not at all needed to run the single-page application, but the Vimeo videos needed to be captured via a web archive. After obtaining the list of video IDs from the data, it was just a matter of running a Browsertrix crawl to archive all of them at the right URL.

The three Scalar-based projects, Black Quotidian, When Melodies Gather, and Constructing the Sacred, at first also seemed like they would require a running Scalar instance. However, using the Scalar APIs, along with warcit to get any resources in the local Scalar directory, it was possible to obtain a full list of URLs that needed to be crawled. Using Browsertrix to archive ~1000-1200 URLs from each Scalar project, and using Webrecorder Desktop App to archive certain navigation elements, resulted in a fairly complete archive.

Finally, The Chinese Deathscape is a Ruby on Rails application, with a database and dependent data sets. It would have taken some time to migrate it to run in Docker and on Kubernetes, but that would not have mattered. The entire project is just a few pages, easily capturable with Webrecorder Desktop, while most of the complexity lies in the numerous embedded maps. The project contains four or five different maps of China, at different zoom levels, loaded dynamically from OpenStreetMap and ArcGIS. To fully archive this project, I first used Webrecorder Desktop to chart out the ‘bounding box’ of the map, and then attempted to automate capturing the remaining tiles via a script.
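To give a sense of what automating tile capture from a bounding box can look like, here is a minimal sketch that enumerates tile URLs using the standard OpenStreetMap "slippy map" tile numbering. It is not the script used for this project: the bounding box values are rough, made-up coordinates, the function names are hypothetical, and ArcGIS tiles would need their own URL scheme.

```python
import math

# Standard OpenStreetMap tile URL layout: zoom / x / y.
TILE_URL = "https://tile.openstreetmap.org/{z}/{x}/{y}.png"


def tile_for(lat, lon, zoom):
    """Return the (x, y) slippy-map tile containing a point at a zoom level."""
    n = 2 ** zoom
    x = int((lon + 180.0) / 360.0 * n)
    lat_rad = math.radians(lat)
    y = int((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    # Clamp to the valid tile range for points on or beyond the map edge.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


def tiles_in_bbox(south, west, north, east, zoom):
    """List (zoom, x, y) for every tile touching the bounding box."""
    x_min, y_min = tile_for(north, west, zoom)  # tile y grows southward
    x_max, y_max = tile_for(south, east, zoom)
    return [
        (zoom, x, y)
        for x in range(x_min, x_max + 1)
        for y in range(y_min, y_max + 1)
    ]


# Rough, illustrative bounding box; a real capture would use the charted one.
urls = [
    TILE_URL.format(z=z, x=x, y=y)
    for zoom in range(4, 7)
    for z, x, y in tiles_in_bbox(18.0, 73.0, 54.0, 135.0, zoom)
]
```

The resulting URL list could then be handed to a crawler, much like the page lists used for the other projects. The number of tiles roughly quadruples with each zoom level, which is part of why full tile capture is hard to automate.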

The Chinese Deathscape requires another QA pass to check on the map tiles, and Constructing the Sacred requires another pass to capture 3D tiles of 3D models used in that project. Webrecorder tools are capable of capturing both, though it is currently a manual process.

Overall, it turned out that running the server in a container did not help with the ‘hard parts’ of preservation, with perhaps one exception: full text search.

Embedded Full-Text Search

The Scalar projects all include a built-in search, and I wanted to see if a search could be implemented entirely client-side, as part of the ReplayWeb.page system.

By using Browsertrix to generate a full-text search index over the same pages that were crawled, it is possible to create a compact index that can be loaded in the browser, using the FlexSearch JavaScript search engine. Over the last week, I was able to add an experimental full-text search index to the three Scalar projects and even to The Chinese Deathscape. This results in a nearly-100% fidelity web archive. Since the live Chinese Deathscape does not come with a search engine, the web archive is arguably more complete than the original site.
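The general idea behind a client-side index can be sketched in a few lines. The example below is a plain inverted index written in Python for illustration. It is not FlexSearch or its API, and the page URLs and text are made up. Each word maps to the set of pages that contain it, so answering a query needs only the index, not the pages themselves.

```python
import re
from collections import defaultdict


def build_index(pages):
    """Map each lowercase word to the set of page URLs containing it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in re.findall(r"\w+", text.lower()):
            index[word].add(url)
    return index


def search(index, query):
    """Return the URLs of pages that contain every word in the query."""
    words = re.findall(r"\w+", query.lower())
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


# Hypothetical page text, standing in for text extracted during a crawl.
pages = {
    "https://example.com/chisholm": "Video of Shirley Chisholm speaking",
    "https://example.com/about": "About this digital publication",
}
index = build_index(pages)
hits = search(index, "Shirley")
```

Because the index is just data, it can be shipped as a static file alongside the archive and queried entirely in the browser.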

Search can be initiated by entering text into the archive’s location bar, as shown in the video below.

The following video demonstrates searching for “Shirley” in the Black Quotidian web archive to find a page containing the video of Shirley Chisholm:

Future Work and Improvements

One of the remaining challenges is how to create better automation around capturing complex projects. I believe a combined automated + manual capture by a user familiar with a project will be necessary to fully archive such complex digital publications.

For example, archiving Scalar sites and lists of Vimeo videos can be easily automated, thanks to the existence of concise APIs to discover the page list, but some pages may require a manual ‘patching’ approach using interactive browser-based capture. Archiving map tiles and 3D models may continue to be a bit more challenging, as the discovery of tiles may prove to be complex or always require a human driver.

Other projects, with more dynamic requirements beyond text search, may yet require a functioning server for full preservation. For these projects, the ReplayWeb.page system can provide an extensible mechanism for routing web replay between a web archive and a remote running web server.

A major goal for Webrecorder is to create tools to allow anyone to archive digital publications into their own portable, self-hostable WACZ files. The plan is to release better tools to further automate capture of difficult sites, including server preservation, combined with the user-driven web archiving approach that remains the cornerstone of Webrecorder’s high fidelity archiving.

If you have any questions/comments/suggestions about this work, please feel free to reach out, or better yet, start a discussion on the Webrecorder Community Forum.